Introduction to Color Spaces in Video

What is color space?

Color space is a mathematical representation of a range of colors. When referring to video, many people use the term “color space” when actually referring to the “color model.” Some common color models include RGB, YUV 4:4:4, YUV 4:2:2, and YUV 4:2:0. This page aims to explain the representation of color in a video setting while outlining the differences between common color models.

How are colors represented digitally?

Virtually all displays, whether TV, smartphone, monitor, or otherwise, start by displaying colors at the same level: the pixel. A pixel is a small element that displays one color at a time. Pixels are like tiles in a mosaic, with each pixel representing a single sample of a larger image. When properly aligned and illuminated, they collectively present a complex image to the viewer.

While the human eye perceives each pixel as a single color, every pixel is actually made up of three subpixels colored red, green, and blue.

By combining these subpixels in different ratios, different colors can be obtained.
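As a rough illustration of mixing subpixel intensities, the sketch below packs three subpixel values into the familiar `#RRGGBB` notation. It assumes 8 bits per subpixel (values from 0 to 255), and the helper name `to_hex` is made up for this example.

```python
def to_hex(r, g, b):
    """Pack three 8-bit subpixel intensities into a #RRGGBB string."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("subpixel intensity must be in 0..255")
    return f"#{r:02X}{g:02X}{b:02X}"

# Different ratios of red, green, and blue give different colors.
red = to_hex(255, 0, 0)          # only the red subpixel is lit
yellow = to_hex(255, 255, 0)     # red and green at full intensity
white = to_hex(255, 255, 255)    # all three at full intensity
black = to_hex(0, 0, 0)          # all three off
```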

8-bit vs 10-bit color

YUV or YCbCr color space

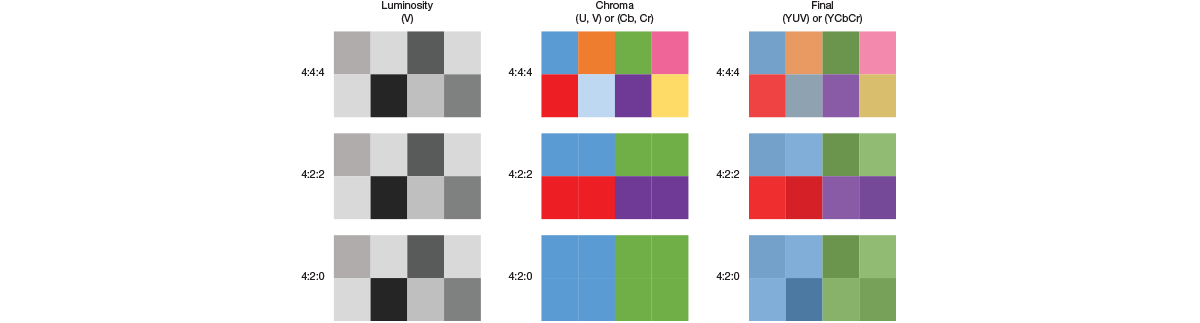

YUV color space was invented as a broadcast solution to send color information through channels built for monochrome signals. Color is incorporated into a monochrome signal by combining the monochrome signal (also called brightness, luminance, or luma, and represented by the Y symbol) with two chrominance signals (also called chroma and represented by the UV or CbCr symbols). This allows for full color definition and image quality on the receiving end of the transmission.

Storing or transferring video over IP can be taxing on network infrastructure. Chroma subsampling is a way to represent this video at a fraction of the original bandwidth, thereby reducing the strain on the network. It takes advantage of the fact that the human eye is more sensitive to brightness than to color. By reducing the detail in the color information, video can be transferred at a lower bitrate in a way that's barely noticeable to viewers.

YUV 4:4:4

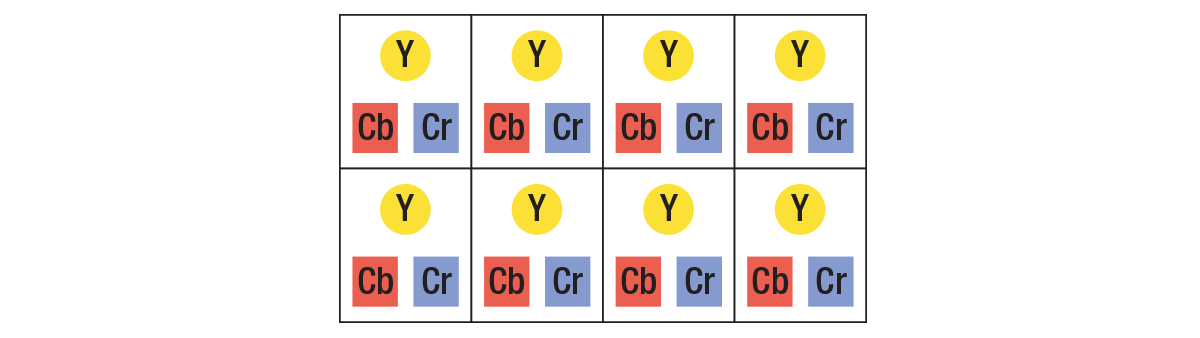

4:4:4 full color depth

Full color depth is usually referred to as 4:4:4. The notation describes a reference region four pixels wide and two rows high: the first number is the width of the region in pixels, the second is the number of unique color (chroma) samples in the first row, and the third is the number of color samples that change in the second row. These numbers are unrelated to the size of individual pixels.

Each pixel then receives three signals: one luma (brightness) component represented by Y, and two color difference components, known as chroma, represented by Cb (U) and Cr (V).

YUV subsampling

Subsampling is a way of sharing color across multiple pixels, relying on the eye and brain's natural tendency to blend neighboring pixels. It reduces the color resolution by sampling chroma information at a lower rate than luma information.

YUV 4:2:2 vs. 4:2:0

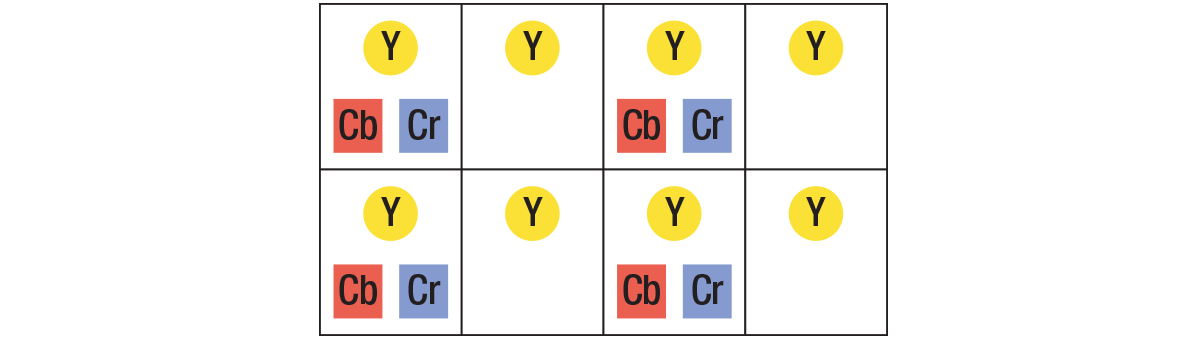

4:2:2 subsampling implies that the chroma components are only sampled at half the frequency of the luma:

The chroma components from pixels one, three, five, and seven will be shared with pixels two, four, six, and eight respectively. This reduces the overall image bandwidth by 33%.
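The sharing scheme described above can be sketched in a few lines. This is a minimal illustration, assuming each pixel is a `(Y, Cb, Cr)` tuple and that the chroma of the first pixel in each pair is kept as-is (real encoders may filter or average instead). The function names are hypothetical.

```python
def subsample_422(row):
    """Pack a row of (Y, Cb, Cr) pixels into (Y0, Y1, Cb, Cr) groups.

    Every pixel keeps its own luma; each pair keeps only the chroma
    of its first pixel.
    """
    if len(row) % 2:
        raise ValueError("row length must be even")
    packed = []
    for i in range(0, len(row), 2):
        y0, cb, cr = row[i]
        y1, _, _ = row[i + 1]
        packed.append((y0, y1, cb, cr))
    return packed

def reconstruct_422(packed):
    """Rebuild per-pixel (Y, Cb, Cr) tuples by sharing chroma within each pair."""
    pixels = []
    for y0, y1, cb, cr in packed:
        pixels.append((y0, cb, cr))
        pixels.append((y1, cb, cr))
    return pixels

# Eight pixels with arbitrary sample values.
row = [(16 + i, 100 + i, 200 - i) for i in range(8)]
packed = subsample_422(row)
samples_444 = 3 * len(row)                    # 24 samples before subsampling
samples_422 = sum(len(g) for g in packed)     # 16 samples afterwards
```

Going from 24 to 16 samples is the one-third reduction quoted above. After reconstruction, pixel two carries the chroma of pixel one, pixel four that of pixel three, and so on.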

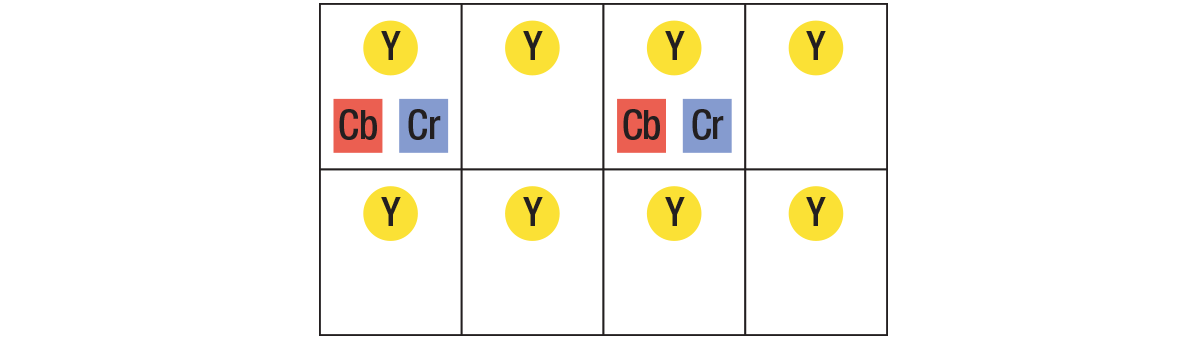

Similarly, in 4:2:0 subsampling, the chroma components are sampled at a quarter of the frequency of the luma.

The components are shared by four pixels in a square pattern, which reduces the overall image bandwidth by 50%.
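A minimal sketch of the square sharing pattern, assuming the image is stored as three separate planes (lists of rows) and that the top-left chroma sample of each 2x2 block is the one kept. Averaging the four samples is another common choice. The function names are illustrative only.

```python
def subsample_420(y_plane, cb_plane, cr_plane):
    """Keep full-resolution luma and one chroma sample per 2x2 block."""
    height, width = len(y_plane), len(y_plane[0])
    if height % 2 or width % 2:
        raise ValueError("width and height must be even")
    # Take every second row, and every second sample within that row.
    cb_sub = [row[::2] for row in cb_plane[::2]]
    cr_sub = [row[::2] for row in cr_plane[::2]]
    return y_plane, cb_sub, cr_sub

def count_samples(planes):
    """Total number of stored samples across all planes."""
    return sum(len(row) for plane in planes for row in plane)

# A 4x2 pixel region with arbitrary sample values.
y = [[10, 20, 30, 40], [50, 60, 70, 80]]
cb = [[1, 2, 3, 4], [5, 6, 7, 8]]
cr = [[9, 8, 7, 6], [5, 4, 3, 2]]

full = count_samples((y, cb, cr))                   # 24 samples in 4:4:4
reduced = count_samples(subsample_420(y, cb, cr))   # 8 luma + 2 Cb + 2 Cr = 12
```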

Several other chroma subsampling methods exist, but the principle remains the same: reduce the image bandwidth by reducing the pixel color sampling frequency.

The image below details how a 4x2 pixel region is represented in 4:2:0 and 4:2:2 subsampling.

In the example below, the three frames (one luminosity and two chromas) can be combined to create the final colored image:

In such cases, the incoming signal will only have a luma (Y) component, and no chroma components (U or V).

Subsampling size saving

With 8 bits per component,

- In 4:4:4, each pixel will require three bytes of data (since all three components are sent per pixel).

- In 4:2:2, every two pixels will have four bytes of data. This gives an average¹ of two bytes per pixel (33% bandwidth reduction).

- In 4:2:0, every four pixels will have six bytes of data. This gives an average of 1.5 bytes per pixel (50% bandwidth reduction).
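The per-pixel averages in the list above can be derived from the subsampling notation itself. The sketch below assumes the usual reading of J:a:b over a region J pixels wide and two rows high, with `a` chroma samples in the first row and `b` in the second, for each of the two chroma components. The function name is made up for this example.

```python
from fractions import Fraction

def bytes_per_pixel(j, a, b, bits_per_component=8):
    """Average bytes per pixel for J:a:b subsampling over a J x 2 region."""
    pixels = 2 * j                   # two rows of J pixels
    luma_samples = pixels            # one Y sample per pixel
    chroma_samples = 2 * (a + b)     # Cb and Cr, first row plus second row
    total_bits = (luma_samples + chroma_samples) * bits_per_component
    return Fraction(total_bits, 8 * pixels)

bpp_444 = bytes_per_pixel(4, 4, 4)   # 3 bytes
bpp_422 = bytes_per_pixel(4, 2, 2)   # 2 bytes
bpp_420 = bytes_per_pixel(4, 2, 0)   # 3/2 bytes

# Reduction relative to 4:4:4.
saving_422 = 1 - bpp_422 / bpp_444   # 1/3
saving_420 = 1 - bpp_420 / bpp_444   # 1/2
```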

| Subsampling | Average size per pixel | Bandwidth required for HD60 content | Bandwidth required for UHD60 content |
|---|---|---|---|
| 4:4:4 | 3 bytes (24-bit) | 373.25 MB/s | 1.49 GB/s |
| 4:2:2 | 2 bytes (16-bit) | 248.83 MB/s | 995.33 MB/s |
| 4:2:0 | 1.5 bytes (12-bit) | 186.62 MB/s | 746.50 MB/s |
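The figures in the table correspond to width × height × frame rate × average bytes per pixel, which gives a raw data rate in bytes per second. The sketch below reproduces them, assuming HD60 means 1920x1080 at 60 frames per second, UHD60 means 3840x2160 at 60 frames per second, and no blanking or transport overhead is counted. The function name is hypothetical.

```python
def raw_rate_bytes_per_second(width, height, fps, bytes_per_pixel):
    """Uncompressed video data rate in bytes per second."""
    return width * height * fps * bytes_per_pixel

HD60 = (1920, 1080, 60)     # assumed meaning of "HD60"
UHD60 = (3840, 2160, 60)    # assumed meaning of "UHD60"

rates = {}
for name, bpp in (("4:4:4", 3), ("4:2:2", 2), ("4:2:0", 1.5)):
    rates[name] = (
        raw_rate_bytes_per_second(*HD60, bpp),
        raw_rate_bytes_per_second(*UHD60, bpp),
    )

# rates["4:4:4"][0] is 373,248,000 bytes/s, about 373.25 million bytes per second.
# Multiply by 8 for bits per second (about 2.99 Gb/s for HD60 at 4:4:4).
```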

When to use chroma subsampling and when to avoid it?

Chroma subsampling is well suited to natural content, where lower chroma resolution isn't noticeable.

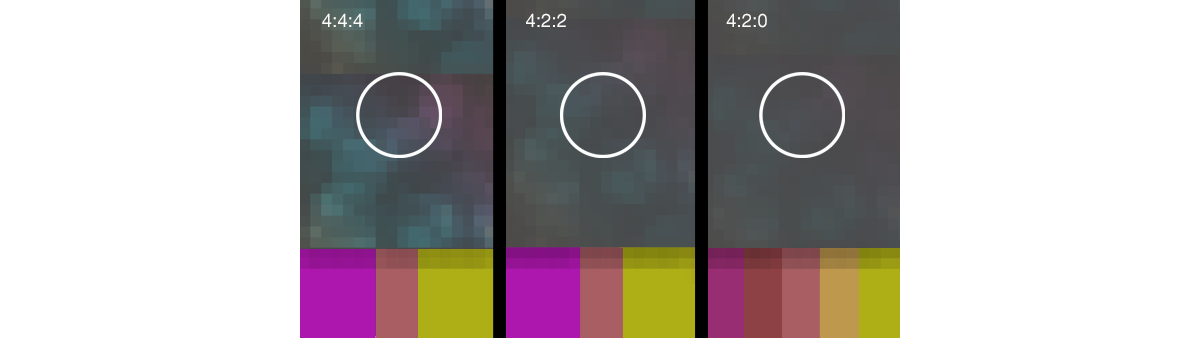

On the other hand, for complex and precise synthetic content (for example, CGI content), full color depth is needed to prevent visible artifacts such as edge blurring, since pixel-precise content may exacerbate them.

The images below show how CGI data can be impacted by subsampling.

The finer details are lost when this image is displayed using chroma subsampling. This can be dangerous in mission-critical environments where key decisions are made based on the presented data. When text is sampled at 4:2:2 or 4:2:0, the quality drops, making the text increasingly difficult to read.
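To see why fine detail suffers, consider a one-pixel-wide red stroke on a white background. The sketch below is an illustration under stated assumptions: pixels are `(Y, Cb, Cr)` tuples using approximate 8-bit limited-range values for white and red, and 4:2:2 is modeled by copying the chroma of the first pixel in each pair onto the second. The function name is invented for this example.

```python
WHITE = (235, 128, 128)   # approximate (Y, Cb, Cr) for white
RED = (81, 90, 240)       # approximate (Y, Cb, Cr) for saturated red

def roundtrip_422(row):
    """Subsample a row to 4:2:2 and expand it back to one triple per pixel."""
    if len(row) % 2:
        raise ValueError("row length must be even")
    out = []
    for i in range(0, len(row), 2):
        _, cb, cr = row[i]                  # chroma kept from the first pixel
        out.append((row[i][0], cb, cr))
        out.append((row[i + 1][0], cb, cr))
    return out

# A one-pixel red stroke in the second position of the row.
row = [WHITE, RED, WHITE, WHITE]
result = roundtrip_422(row)

# The stroke keeps its luma (81) but inherits its neighbor's neutral chroma,
# so it is rendered as dark gray instead of red.
stroke = result[1]
```

The same effect on thin glyph strokes is what makes small colored text harder to read after subsampling.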

When choosing products for video walls, for example, it's crucial to choose technologies that allow versatility with regard to color space. Take a control room, for instance. Part of the control room wall may display charts or graphs where every detail matters. In this case, a capture, encoding, decoding, and display product that can handle 4:4:4 is better suited. On the other hand, for a feed of high-motion content, such as a sports event, the overall network bandwidth can be reduced by playing the video at 4:2:0. Versatility is key when choosing products for capture, streaming, recording, decoding, and display, as it gives the user access to a wider range of functionality.

While the output image in RGB and in YUV without subsampling will look very similar, and the bandwidth required to transfer the image will be the same², the storage and transfer of data will differ between the two.

RGB transmits content with a predetermined color depth per component. Each pixel carries an R, a G, and a B value, holding the red, green, and blue color components respectively, which together define the overall color of that pixel.

YUV, on the other hand, will transmit each pixel with the associated luma component, and two chroma components.

Color space conversion

It's possible to convert between RGB and YUV. Converting to YUV and using subsampling when appropriate will help reduce the bandwidth required for this transmission.
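The article does not say which conversion matrix is used, so the sketch below is only one possibility: it assumes the full-range BT.601 coefficients used by JPEG/JFIF with 8-bit components. Video systems often use other matrices (for example BT.709) and limited-range values. The function names are hypothetical.

```python
def _clamp8(value):
    """Round to the nearest integer and clamp to the 8-bit range."""
    return max(0, min(255, round(value)))

def rgb_to_ycbcr(r, g, b):
    """Convert 8-bit RGB to full-range YCbCr (BT.601 coefficients, assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return _clamp8(y), _clamp8(cb), _clamp8(cr)

def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr, up to rounding error."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return _clamp8(r), _clamp8(g), _clamp8(b)

white = rgb_to_ycbcr(255, 255, 255)   # neutral colors have Cb = Cr = 128
black = rgb_to_ycbcr(0, 0, 0)
restored = ycbcr_to_rgb(*rgb_to_ycbcr(200, 30, 90))
```

Because each component is rounded to an integer, a round trip may differ from the original by a small amount per channel.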

1. This is an important distinction. Although the average size of the pixel is two bytes, some pixels will be three bytes, and some will be one byte, depending on whether chroma was sampled for a given pixel.

2. This assumes that the RGB color depth and the YUV size per component are the same.
